3D Measurements and Analysis

SINTEF develops advanced, optical systems for the acquisition of 3D data as well as sophisticated methods and algorithms for 3D analysis.

Computer Vision involves the extraction and interpretation of information from 2D and 3D images and videos. It plays a key role in enabling autonomous systems to understand and interact with their surroundings. The Computer Vision group performs research in 2D and 3D image analysis, video analysis, robot vision, and machine learning. We develop new technologies and solutions for our industrial partners, enabling improved autonomy, quality, reliability, and safety. We have expertise in a wide range of sensing modalities and technologies, including visual-spectrum cameras, UV, IR, X-ray, lidar, ultrasound, RGB-D and 3D imagers, and other custom optical sensors.

Image analysis is the extraction of meaningful information from two-dimensional (2D) or three-dimensional (3D) digital images using digital image processing techniques.

Smart design of sensors and onboard AI enables safer, more capable, and more efficient autonomy for unmanned ground vehicles and drones.

Automatically analyzing video to detect and determine temporal and spatial events has a multitude of use cases in a wide range of domains such as entertainment, healthcare, retail, automotive, transport, home automation, flame and smoke detection...

The aim of robot vision is to make a wide range of robot platforms able to interact with the world around them through visual inputs.

The computer vision group develops AI-based vision systems based on an extensive understanding of the image generation process and cutting-edge machine learning algorithms. We specialize in developing robust and trustworthy deep learning networks for a...

The DigiBygg project aims to achieve fast and cost-effective production of detailed digital building models, which will promote broader adoption of digital processes in the building sector.

Improve occupational health and safety conditions within the ship recycling industry. By leveraging state-of-the-art robotics, computer vision, and artificial intelligence systems, we aspire to revolutionize traditional methodologies while promoting...

DeepStruct will enable a significant increase in automation within logistics and automation by developing 3D cameras capable of imaging transparent and other challenging objects.

Robots need eyes in space, too. In this project, we develop a high-resolution 3D camera for space applications. In addition to superb measurement quality, ESA requires a compact, simple, robust, and low-power design.

The vision for this project is to develop a fully digital process for inspection of indoor industrial assets based on an intelligent, tethered drone system that eliminates the need for human entry to confined and often dangerous spaces. The system...

Develop a system for automatic interpretation of sounds, facial expressions and body gestures of people who cannot speak.

The primary goal of the project is to develop a sensor suite and algorithms that will enable the detection and classification of critical faults – those that can cause power outages – even in poor visibility conditions.

Develop a compact and easy-to-use retinal camera for early screening of diabetic retinopathy.

The central R&D challenges within this project focus on the use of machine learning techniques and cloud-based services to deliver more user-friendly and robust 3D camera solutions.

The FeedCarrier project will develop an automatic feeding system that analyses, fills, and distributes feed fully automatically, thereby reducing manual labour and improving safety for the farmer as well as enabling precise control over the timing...

SMARTFISH H2020 is an international research project which aims to develop, test and promote a suite of high-tech systems for the EU fishing sector. The goal is to optimize resource efficiency, improve automatic data collection for fish stock...

We will enable scalability in the number of robust, high-quality assays that can be developed for SpinChip's diagnostics platform by combining microfluidic simulations, advanced computer vision, and state-of-the-art machine learning.

The primary objective of the DroneSAFE project was to develop a professional-quality cinematography solution for action and adventure sports in an affordable, safe, and easy-to-use consumer drone platform.

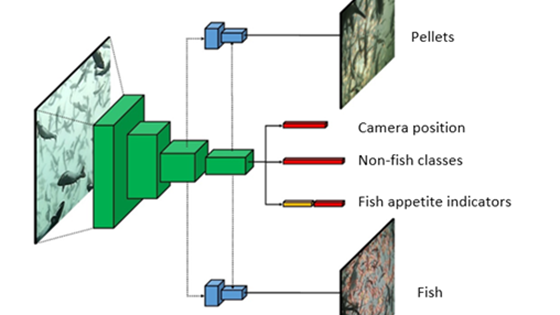

Good control when feeding farmed salmon in sea cages is essential. With too little feed, the fish do not reach their growth potential, while too much feed is bad for both the economy and the environment.

Carcass classification should be an objective assessment of the carcass based on its composition of meat, fat, and bone. Today, classification is an important basis for the price paid to farmers and industry. The project has developed instrumentation and...

A new subsea camera has been developed that can see two to three times further under water than existing cameras and calculate distances to objects. This will make work carried out under water much easier.

In Flexi3D we will develop new technologies that can meet industry needs: a rapid and precise pick, place & verify solution that enables profitable future automation solutions.

The I3DS project intends to develop a generic modular Inspector Sensors Suite (INSES), which will be a smart collection of building blocks and a common set of various sensors answering the needs of near-future space exploration missions in terms of remote...

The goal of the "Meet easy" project was to provide a complete, easy-to-use videoconferencing solution delivering high-end features such as high-quality audio and video with a wide field of view – in a compact form and at a fraction of the cost...

Researchers have developed a robot that adjusts its movements in order to avoid colliding with the people and objects around it. This provides new opportunities for more friendly interaction between people and machines.

A 3D camera developed in Norway may be the first in the world that can film in all directions.

The robots of the future must be able to adapt to changes in their surroundings. Some of them will be in close contact with people. At the very least they must be able to see properly – in three dimensions, just like us.

The primary objective of this project is to develop new technology and processes for increased safety and efficiency in nuclear decommissioning in Norway and abroad.

PILOTING aims to increase the efficiency, safety, and quality of inspection and maintenance activities. The primary application will be in civil infrastructure such as tunnels and bridges, as well as in refineries.